Using Azure Functions with Blob Storage to Process Incoming Data

What even is Blob Storage?

When it comes to storing data in Azure, the first service that comes to mind is Azure Blob Storage. Blob Storage lives inside an Azure Storage account and is one of the four data storage services (table, queue, file, blob) that these accounts offer. Storage accounts come in two performance tiers, Standard and Premium. Standard general-purpose v2 accounts fit most workloads, while Premium uses SSD-backed storage for workloads that need consistently low latency or high transaction rates. I'll be sure to make another post going more in depth on Azure Storage accounts later, but for now let's dive into the service we will be using today.

Blob Storage allows data of all different types to be stored as a blob, which, for the sake of analogy, is the equivalent of a file on a Windows file system. Unlike a Windows file system, Blob Storage does not use a true hierarchy of nested folders by default. Instead, it uses containers to separate and organize blobs, and any folder-like structure comes from slashes in the blob names. As many native Azure services do, Blob Storage offers strong security and access delegation through Microsoft Entra ID RBAC and SAS (Shared Access Signatures), plus lifecycle management and many other features.

The reason I am using Blob Storage today is to store the output of an Azure Function.

Azure Functions - what?

An Azure Function is a serverless solution that processes data through highly customizable, event-driven triggers and bindings. When I say serverless, I'm specifically talking about the Consumption hosting plan, which charges you on a pay-as-you-go basis rather than for a predefined specification. Today I'll be using the Consumption plan. I won't go in depth on them, but here is the full list of hosting options for Azure Functions:

- Flex Consumption

- Premium

- Dedicated

- Container Apps

- Consumption (as I already mentioned)

For more info on the other plans, check out Microsoft's documentation: Azure Functions scale and hosting | Microsoft Learn

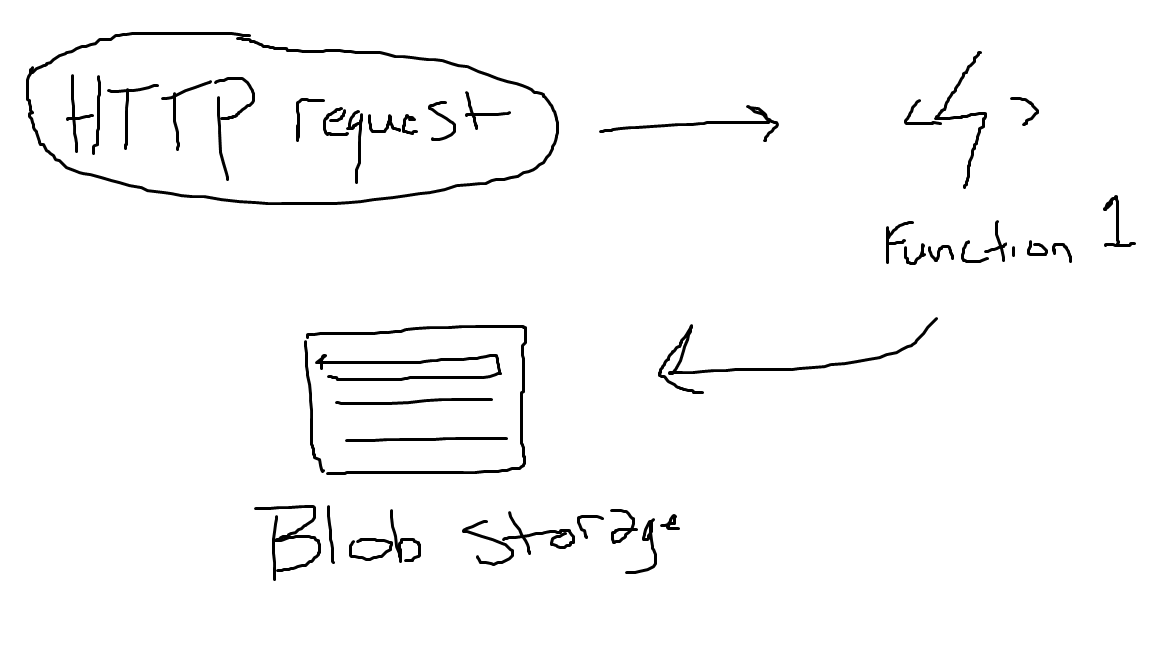

I am going to be using an Azure Function with an HTTP trigger and Blob Storage Output binding in order to process data from HTTP requests and store it in Blob Storage.

Setting up our infrastructure

I've been trying to improve my C# skills so I thought it'd be best to set up our resource group, storage account and container in a Console App.

- Create a Console App in Visual Studio 2022

- Here's the code that I'm going to be using to build out our resources:

// This script provisions a resource group, storage account, and blob container using the Azure.ResourceManager SDK.

// It does not deploy the Azure Function itself; use it to prepare the infrastructure first.

using Azure;

using Azure.Core;

using Azure.Identity;

using Azure.ResourceManager;

using Azure.ResourceManager.Resources;

using Azure.ResourceManager.Storage;

using Azure.ResourceManager.Storage.Models;

// This works for me because I am signed in to Visual Studio with an account connected to my Azure subscription

ArmClient armClient = new ArmClient(new DefaultAzureCredential());

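/* If you have multiple subscriptions and want to target a specific one, use these lines in place of the GetDefaultSubscriptionAsync call below:

string subscriptionId = "your-specific-subscription-id";

ResourceIdentifier resourceIdentifier = new($"/subscriptions/{subscriptionId}");

// GetSubscriptionResource makes no network call, so fetch the data before reading subscription.Data

SubscriptionResource subscription = await armClient.GetSubscriptionResource(resourceIdentifier).GetAsync();

*/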

// If you want to use your default subscription:

SubscriptionResource subscription = await armClient.GetDefaultSubscriptionAsync();

Console.WriteLine($"Here is my subscription display name: {subscription.Data.DisplayName}");

Console.WriteLine($"Here is my subscription ID: {subscription.Data.SubscriptionId}");

//Create a resource group

ResourceGroupCollection resourceGroups = subscription.GetResourceGroups();

string resourceGroupName = "myRgName";

AzureLocation location = AzureLocation.WestUS;

ResourceGroupData resourceGroupData = new ResourceGroupData(location);

ArmOperation<ResourceGroupResource> operation = await resourceGroups.CreateOrUpdateAsync(WaitUntil.Completed, resourceGroupName, resourceGroupData);

ResourceGroupResource resourceGroup = operation.Value;

Console.WriteLine("Your resource group was created!");

//Create a storage account

StorageAccountCollection storageAccounts = resourceGroup.GetStorageAccounts();

string storageAccountName = "mystorageacct" + Guid.NewGuid().ToString("N").Substring(0, 8);

StorageSku sku = new StorageSku(StorageSkuName.StandardLrs);

StorageKind kind = StorageKind.StorageV2;

var parameters = new StorageAccountCreateOrUpdateContent(sku, kind, location);

ArmOperation<StorageAccountResource> storageOp = await storageAccounts.CreateOrUpdateAsync(WaitUntil.Completed, storageAccountName, parameters);

StorageAccountResource storageAccount = storageOp.Value;

Console.WriteLine($"Your storage account '{storageAccountName}' was created!");

//Create a blob container

BlobServiceResource blobService = await storageAccount.GetBlobService().GetAsync();

BlobContainerCollection containers = blobService.GetBlobContainers();

string containerName = "my-container";

BlobContainerData containerData = new BlobContainerData();

ArmOperation<BlobContainerResource> containerOp = await containers.CreateOrUpdateAsync(WaitUntil.Completed, containerName, containerData);

BlobContainerResource container = containerOp.Value;

Console.WriteLine($"Your blob container '{containerName}' was created!");

Now, I can't imagine anyone in a production environment taking the time to build resources by hand like this, so I want to emphasize that I only did it to polish my C# skills. Normally I'd spin something like this up with the Azure CLI, or write an ARM template if I were going to reuse it. Of course, you can replace whatever details you want in my code above.
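If you do swap in your own names, keep in mind that storage account names must be 3 to 24 characters long and may contain only lowercase letters and digits. Here is a minimal sketch of a check you could run before calling the SDK. The helper IsValidStorageAccountName is hypothetical (it is not part of the Azure SDK), and it does not check that the name is globally unique, which Azure also requires.

```csharp
using System;
using System.Linq;

// Hypothetical helper (not part of the Azure SDK): checks a candidate name against
// the storage account naming rules (3-24 characters, lowercase letters and digits only).
// It does NOT check global uniqueness, which only Azure can confirm.
static bool IsValidStorageAccountName(string name) =>
    name.Length >= 3 && name.Length <= 24 &&
    name.All(c => (c >= 'a' && c <= 'z') || (c >= '0' && c <= '9'));

// Same construction as in the provisioning code: a fixed prefix plus 8 hex characters.
string candidate = "mystorageacct" + Guid.NewGuid().ToString("N").Substring(0, 8);

Console.WriteLine($"{candidate} valid: {IsValidStorageAccountName(candidate)}");

// An illustrative bad name: uppercase letters and an underscore are not allowed.
Console.WriteLine($"My_Storage valid: {IsValidStorageAccountName("My_Storage")}");
```

The prefix plus eight characters comes to 21 characters, so the generated name stays inside the limit. A longer prefix would need a shorter random suffix.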

Here's a link to the documentation I used as a reference:

Azure Resource Manager SDK for .NET - Azure for .NET Developers | Microsoft Learn

- Verify that the resources exist. I didn't add anything to the code to confirm that the resources were created, so in this case I have the Azure Portal open to check.

Creating our Azure Function in Visual Studio

This time it's a little more justified to be using Visual Studio. We are going to use the Azure Functions template with an HTTP trigger to start us off.

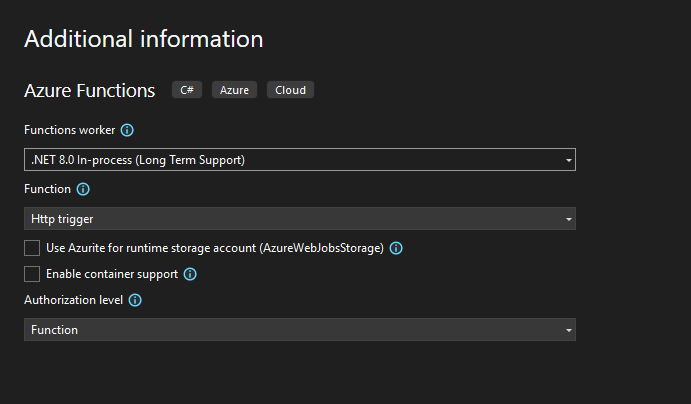

- Create a new project from the Azure Functions template with an HTTP trigger, targeting .NET 8.0 In-process (Long Term Support).

- Here's my code:

using System;

using System.IO;

using System.Threading.Tasks;

using Microsoft.AspNetCore.Mvc;

using Microsoft.Azure.WebJobs;

using Microsoft.Azure.WebJobs.Extensions.Http;

using Microsoft.AspNetCore.Http;

using Microsoft.Extensions.Logging;

using Newtonsoft.Json;

namespace httpfunc_nonisolated

{

public static class Function1

{

[FunctionName("Function1")]

public static async Task<IActionResult> Run(

[HttpTrigger(AuthorizationLevel.Function, "get", "post", Route = null)] HttpRequest req,

[Blob("my-container/{rand-guid}.txt", FileAccess.Write, Connection = "AzureWebJobsStorage")] Stream outputBlob,

ILogger log)

{

log.LogInformation("C# HTTP trigger function processed a request.");

string name = req.Query["name"];

string requestBody = await new StreamReader(req.Body).ReadToEndAsync();

dynamic data = JsonConvert.DeserializeObject(requestBody);

name = name ?? data?.name;

string responseMessage = string.IsNullOrEmpty(name)

? "This HTTP triggered function executed successfully. Pass a name in the query string or in the request body for a personalized response."

: $"Hello, {name}. This HTTP triggered function executed successfully.";

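// Write the response message to the output blob

using var writer = new StreamWriter(outputBlob);

await writer.WriteAsync(responseMessage);

// Flush asynchronously so the buffered text reaches the blob stream before the writer is disposed

await writer.FlushAsync();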

return new OkObjectResult(responseMessage);

}

}

}

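This code accepts HTTP requests at the function URL, builds a response message from the name value in the query string or request body, and writes that message to a new blob in the container we created earlier. For that to work, the AzureWebJobsStorage connection setting named in the binding has to point at the storage account that holds the container.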
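To make it clearer what ends up in the blob, here is a rough sketch of the function's message-building logic pulled out into a plain console program. The helper BuildResponse and the sample names are hypothetical. The function above parses the body with Newtonsoft.Json, but this sketch swaps in System.Text.Json so it runs without extra packages.

```csharp
using System;
using System.Text.Json;

// Hypothetical helper mirroring the function's logic: prefer the query-string value,
// then fall back to a "name" property in the JSON request body.
static string BuildResponse(string? queryName, string requestBody)
{
    string? name = queryName;

    if (name is null && !string.IsNullOrWhiteSpace(requestBody))
    {
        // Like the original, this throws if the body is not valid JSON.
        using JsonDocument doc = JsonDocument.Parse(requestBody);
        if (doc.RootElement.ValueKind == JsonValueKind.Object &&
            doc.RootElement.TryGetProperty("name", out JsonElement value) &&
            value.ValueKind == JsonValueKind.String)
        {
            name = value.GetString();
        }
    }

    return string.IsNullOrEmpty(name)
        ? "This HTTP triggered function executed successfully. Pass a name in the query string or in the request body for a personalized response."
        : $"Hello, {name}. This HTTP triggered function executed successfully.";
}

// Illustrative inputs: a query-string name, a JSON body name, and neither.
Console.WriteLine(BuildResponse("Ada", ""));
Console.WriteLine(BuildResponse(null, "{\"name\": \"Grace\"}"));
Console.WriteLine(BuildResponse(null, ""));
```

Whatever string this logic produces is both the HTTP response and the content of the new blob.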

Verifying Functionality

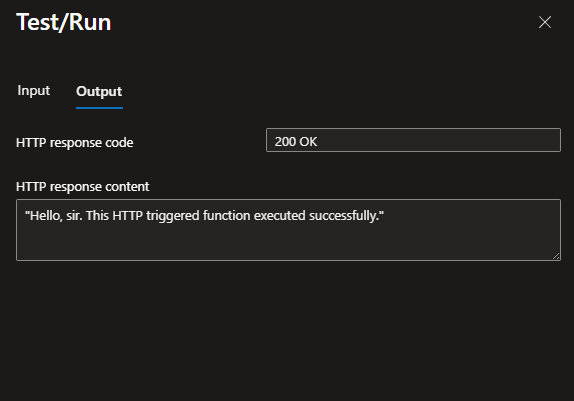

I tested the function in two ways. The first is to open the function in the Azure Portal and navigate to the "Test/Run" feature. Once there, you can enter some values for a GET or POST request and verify that the output lands in your container.

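For the portal test to work, you will need to allow the portal's origin (https://portal.azure.com) in the function app's CORS settings.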

The second method is to use the curl utility, as shown below:

    curl -X POST \
      "https://<function-name>.azurewebsites.net/api/Function1?code=<function-key>" \
      -H "Content-Type: application/json" \
      -d "{\"name\": \"<your-name>\"}"

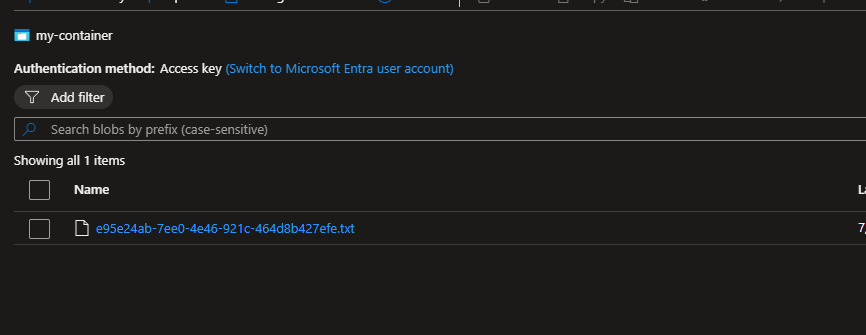

The output appears in the container as a .txt blob with a random GUID for a name, just as the {rand-guid} expression in the binding path specifies.
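As a rough illustration of how that name comes about, the sketch below substitutes a fresh GUID into the same path template and splits the result into container and blob name. This only approximates the behavior: the Functions host resolves {rand-guid} itself, and the string replacement here is my own stand-in, not the runtime's actual code.

```csharp
using System;

// The binding path from the function's Blob attribute.
const string pathTemplate = "my-container/{rand-guid}.txt";

// Stand-in for what the runtime does: put a new GUID where the placeholder is.
// (Illustrative only; the Functions host performs this substitution itself.)
string resolvedPath = pathTemplate.Replace("{rand-guid}", Guid.NewGuid().ToString());

// The first path segment is the container; the remainder is the blob name.
int slash = resolvedPath.IndexOf('/');
string containerName = resolvedPath.Substring(0, slash);
string blobName = resolvedPath.Substring(slash + 1);

Console.WriteLine($"Container: {containerName}");
Console.WriteLine($"Blob name: {blobName}");
```

Because every request gets a new GUID, each call to the function creates a separate blob instead of overwriting the previous one.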

That's how you can use an HTTP Trigger with an output Blob binding to process data into a Blob Container.